Commodore’s bankruptcy broke the hearts of many fans of the wonderful hardware and software platform that had delighted them for so many years: the Amiga.

Groups of enthusiasts, and even the companies that took over from the old one, tried to fill the void, proposing new hardware on which to run the OS or even a rewrite of it.

The oldest such project (started just a year later) is AROS (originally an acronym for Amiga Replacement Operating System, later changed to AROS Research Operating System), whose aim was, and still is, to reimplement the API of AmigaOS 3.1 (Commodore’s last release), with source-level compatibility on every hardware platform and binary-level compatibility on the original machines only (those based on Motorola 68000-family processors).

Second in chronological order came MorphOS, also developed by a group of enthusiasts (for machines based on PowerPC processors), who also “borrowed” some components from AROS; unlike the latter, however, it remains mainly closed (the sources of the internally developed components are not publicly available).

The latest arrival is AmigaOS 4, whose development was commissioned by the company that at the time owned what remained of the former Commodore’s assets, with the aim of porting the original OS to machines equipped with PowerPC processors.

All three platforms (running, obviously, on hardware different from the original machines, except for AROS, which also runs on those) were therefore created to provide a kind of continuity with what the Amiga represented, offering a very similar environment to the users who were still very attached to it and who split, more or less, into these three factions.

Although each brought improvements to the original OS (which, in the meantime, had also received updates, new versions and several important innovations, some of them from third parties), it has to be said that, unfortunately, they also carried over major problems that were never solved.

Memory protection

The most important of these certainly concerns memory protection. If MacOS was made famous by its infamous “bombs”, Windows by its “blue screens”, and Unix by its “panic attacks”, the Amiga OS bequeathed us the famous “Guru Meditations”, which very often originated from the total lack of memory protection in this OS.

Any application, in fact, could easily access any memory area, either of other applications or of the OS (more on this below), with the problems that one can easily imagine.

It has to be said that the Amiga’s OS was in very good company, because this was a common problem for all the “mainstream” OSes of the time: DOS, MacOS, GEM (Atari ST) and Windows all worked exactly the same way, and did so for a long time (except for Windows, which could already take advantage of protected mode from its second version, obviously only on processors that supported it).

Some of the “post-Amiga” OSes (I prefer to call them that rather than “NG”) offer some mild protection by intercepting writes to the memory regions where code has been loaded, but this is little compared to the general problem, which remains.

It must be said that more cannot be done if the objective is to preserve wide compatibility with existing Amiga OS applications, since the OS relies heavily on message passing, which on this platform simply means sharing memory: the sender hands the receiver a pointer to its own data rather than a copy.
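To make the point concrete, here is a minimal sketch in standard C of pointer-based message passing. The port and message types and the functions demo_put_msg(), demo_get_msg() and demo_serve() are hypothetical, only loosely modelled on Exec’s PutMsg()/GetMsg(), and are not its real API. The key detail is that nothing is copied: the receiver reads and writes the sender’s memory, which is exactly what an MMU-enforced barrier between the two would forbid.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified message: a list link plus payload.
   Real Exec messages embed a Node and a reply port; this is only a sketch. */
struct demo_msg {
    struct demo_msg *next;   /* link used while queued on a port */
    int request;             /* data written by the sender */
    int reply;               /* data written back by the receiver */
};

/* Hypothetical message port: a FIFO of message pointers. */
struct demo_port {
    struct demo_msg *head;
    struct demo_msg *tail;
};

/* Queue a message: only the pointer is handed over, nothing is copied. */
void demo_put_msg(struct demo_port *port, struct demo_msg *msg)
{
    assert(port != NULL && msg != NULL);
    msg->next = NULL;
    if (port->tail)
        port->tail->next = msg;
    else
        port->head = msg;
    port->tail = msg;
}

/* Dequeue the oldest message, or return NULL if the port is empty. */
struct demo_msg *demo_get_msg(struct demo_port *port)
{
    struct demo_msg *msg = port->head;
    if (msg) {
        port->head = msg->next;
        if (!port->head)
            port->tail = NULL;
    }
    return msg;
}

/* The "receiver" works directly on memory owned by the sender. */
void demo_serve(struct demo_port *port)
{
    struct demo_msg *msg;
    while ((msg = demo_get_msg(port)) != NULL)
        msg->reply = msg->request * 2;   /* writes into the sender's struct */
}
```

The sender’s message can even live on its own stack: once demo_serve() has run, the reply has been written straight into it by another party.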

Implementing complete memory protection is therefore impossible without breaking compatibility: it would require a drastic change that would shake the OS to its foundations, and one which the designers of these OSes evidently did not wish to tackle.

Resource tracking

Another pain that has been dragged along since the early days, and which is intimately linked to the above, is resource management: by this I mean the entities (files, folders, devices, memory, screens, windows, etc.) that the OS makes available and that applications request.

Taking the simplest example, that of opening a file:

#include <stdio.h>
#include <stdlib.h>

int main(void)
{
    FILE* fp = fopen("example.txt", "w+");
    if (!fp) {
        perror("File opening failed");
        return EXIT_FAILURE;
    }
    fputs("Hello, world!\n", fp);
    fclose(fp);
    return EXIT_SUCCESS;
}

problems arise if, for some reason, the application exits without closing the file correctly (via the fclose function), because the file then remains locked until the system is restarted.

A well-structured OS, on the other hand, takes care of all these cases, since it keeps track of all the resources each application has requested and been allocated, and frees them automatically when its execution ends (naturally or brutally).
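A minimal sketch of that bookkeeping, in standard C: each task record, kept by the OS rather than by the application, lists the resources handed to the task, and a cleanup routine releases whatever is left when the task ends, however it ends. The names task_rec, track_alloc() and task_exit() are invented for illustration, and only memory is tracked here, whereas a real kernel would track files, windows, signals and so on as well.

```c
#include <assert.h>
#include <stdlib.h>

/* One tracked resource: here just a heap block. */
struct resource {
    struct resource *next;
    void *block;
};

/* Hypothetical per-task record maintained by the OS. */
struct task_rec {
    struct resource *owned;  /* everything handed to this task */
    int live;                /* number of resources still held */
};

/* Allocate memory on behalf of a task and record who owns it. */
void *track_alloc(struct task_rec *task, size_t size)
{
    struct resource *r;
    assert(task != NULL);
    r = malloc(sizeof *r);
    if (!r)
        return NULL;
    r->block = malloc(size);
    if (!r->block) {
        free(r);
        return NULL;
    }
    r->next = task->owned;
    task->owned = r;
    task->live++;
    return r->block;
}

/* Run by the OS when the task terminates: anything the application
   forgot to release is reclaimed here. Returns how many were reclaimed. */
int task_exit(struct task_rec *task)
{
    int reclaimed = 0;
    while (task->owned) {
        struct resource *r = task->owned;
        task->owned = r->next;
        free(r->block);
        free(r);
        reclaimed++;
    }
    task->live = 0;
    return reclaimed;
}
```

The sketch only works because ownership is unambiguous: if a block could have been passed to another task or installed as a hook, reclaiming it blindly would be unsafe.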

A post-Amiga OS lacks this mechanism, since it does not know, for instance, whether a given resource has been shared with other applications, or whether the application has installed a so-called hook to control parts of the system, in which case even freeing the memory into which the application’s code was loaded could lead to disaster.

The problem, however, is that if the application has not properly freed all its resources before its exit, they will remain in a kind of “limbo”: unused, but still weighing on the system. This is what in jargon is called a leak.

It is easy to imagine that, in the long run, the accumulation of leaks will make the system unusable, requiring a restart in order to begin again from a clean configuration with all resources available.

Process and thread concept

Linked in part to the two previous points are the concepts of process and thread (and also fiber in more modern OSes). When an application starts up, it is associated with a process (and in some systems also a thread, called the main thread) and thus with an address space where its code and data reside.

A process runs in an address space isolated from all other processes, but shares it with all the threads (if any) it creates (to simplify, let us say that threads normally differ only in the stack used for their execution, and possibly their private data).
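On a POSIX system the difference can be observed directly. The sketch below uses standard POSIX calls (fork(), waitpid(), pthread_create(), pthread_join()) with hypothetical function names: it increments a global counter once in a new thread and once in a forked child process. The thread’s change is visible to the caller because both share one address space, while the child modifies only its own private copy.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int counter = 0;  /* lives in the address space of whoever runs this */

/* Thread/child body: increment the counter in the current address space. */
static void *bump(void *arg)
{
    (void)arg;
    counter++;
    return NULL;
}

/* Run bump() in a new thread: the change is visible afterwards. */
int counter_after_thread(void)
{
    pthread_t t;
    counter = 0;
    if (pthread_create(&t, NULL, bump, NULL) != 0)
        return -1;
    pthread_join(t, NULL);
    return counter;          /* 1: same address space */
}

/* Run bump() in a child process: the parent's copy is untouched. */
int counter_after_process(void)
{
    pid_t pid;
    counter = 0;
    pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {          /* child: modifies its own private copy */
        bump(NULL);
        _exit(0);
    }
    waitpid(pid, NULL, 0);
    return counter;          /* 0: the child had a separate address space */
}
```

On the Amiga, every task behaves like the thread case, and not just within one application but across the whole system.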

The idea is, therefore, twofold: on the one hand to isolate memory completely in order to avoid crashes due to incorrect operation, and on the other to share resources. All of this, of course, in a solid and well-controlled manner (which, let’s be clear, does not automatically protect against problems).

Post-Amiga systems offer nothing more than the original: there is only one process running in the system, therefore with only one address space which is shared by all the threads (which in Amiga jargon are called tasks).

A big mishmash, in short, which makes the system extremely fragile and unsuitable for more “mainstream” use.

Multicore

An extraordinary feature (for the time) and one that was perhaps THE strength of the Amiga was that of the integrated coprocessors, Blitter and Copper, to which the CPU could offload quite a bit of work, as they were much faster at completing certain tasks.

Modern computers have long been supported by such devices that perform various tasks (not only graphics, but also video playback & conversion, audio, USB, “disks/storage”, etc., to which AI has recently been added), but for several years now, the CPU has also been “doubled”, leading to so-called multicore systems (CPUs integrating tens or even hundreds of cores).

“Mainstream” OSes were fairly quick to seize the opportunity to exploit the extra computing power of multiple processors, mostly using them in SMP (symmetric multiprocessing) mode rather than AMP (asymmetric multiprocessing) or BMP (bound multiprocessing).

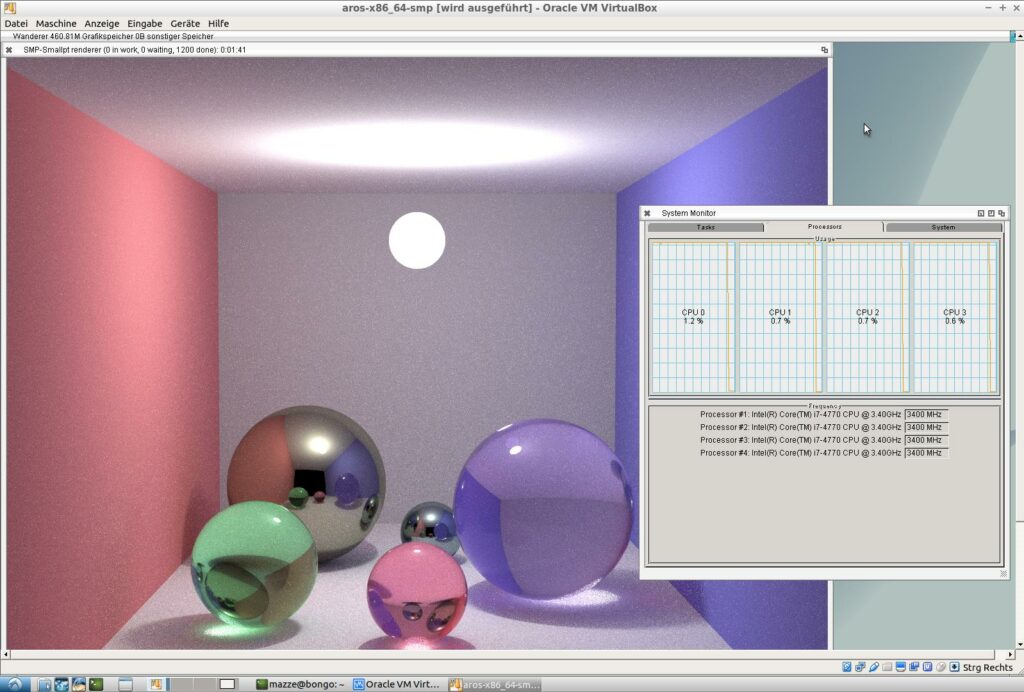

This, unfortunately, did not happen with post-Amiga systems, apart from an experiment by the AROS developers, who introduced SMP support.

Unfortunately, it is not stable at the moment and, furthermore, it is not fully compatible with the OS environment (the 3.1 API included). So, at least for now, it remains an experiment.

On the other hand, given the way the Amiga’s OS works, there is not much that can be done: there are intrinsic problems whose resolution would involve rethinking some parts (in a way incompatible with existing software) and, at the same time, modifying the applications that use them.

64 bits

What is not an experiment, but has instead become a concrete and usable reality, is 64-bit support, which AROS introduced with its port to the x64 architecture (the same and only one on which SMP was implemented and works), finally making it possible to break the 2GB barrier of the original OS and to exploit several hundred gigabytes.

In this case it was simpler, since AROS only aims for source-level compatibility with Amiga applications on architectures other than the original one (binary compatibility being the goal only for Motorola 68000 processors), so a recompilation should be enough to obtain binaries for AROS/x64.

Of course, this is all assuming that the applications are well-written and thus make no assumptions about the size of certain data types (pointers in particular). Well-written applications, yes, but that should be the norm (although reality has often shown us otherwise).
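A minimal illustration of the kind of assumption meant here, in standard C: a hypothetical legacy_record stores an address in a 32-bit field, which is harmless on a 32-bit target but silently drops the upper bits of any address above 4GB on a 64-bit one, whereas uintptr_t (optional in C99, but provided on mainstream platforms) always has room for an object pointer. The record types and functions are invented for the example.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical "legacy" record that keeps an address in 32 bits. */
struct legacy_record {
    uint32_t addr;
};

/* Portable record: uintptr_t can hold any object pointer. */
struct portable_record {
    uintptr_t addr;
};

/* Store an address the legacy way: anything above 4GB loses its top bits. */
uint32_t legacy_store(uint64_t address)
{
    struct legacy_record r;
    r.addr = (uint32_t)address;   /* silently truncated */
    return r.addr;
}

/* Round-trip a real pointer through the portable field. */
int portable_roundtrip_ok(void *p)
{
    struct portable_record r;
    r.addr = (uintptr_t)p;
    return (void *)r.addr == p;
}
```

A recompiled application built on the first pattern may work in testing and then fail only when the OS happens to hand it memory above the 4GB line.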

Other post-Amiga OSes offer nothing of the sort, and are limited to 1 or 2GB of addressable memory (depending on their implementation), more or less closely following the Amiga OS’s limitations.

It has to be said that up to 4GB of memory could have been addressed, were it not that the designers of the original had indulged an excessive (and totally misplaced) mania for extreme optimisation, using the most significant bit of 32-bit pointers to signal errors.

The example par excellence is the AllocEntry() API:

RESULTS
    memList -- A different MemList filled in with the actual memory
        allocated in the me_Addr field, and their sizes in me_Length.
        If enough memory cannot be obtained, then the requirements of
        the allocation that failed is returned and bit 31 is set.

        WARNING: The result is unusual! Bit 31 indicates faulure.

Since the function returns a pointer, a far better solution would have been to use bit 0 to signal a problem (it is always 0 in a valid allocation, because Exec aligns memory blocks to at least 8 bytes), and then shift the result one place to the right to obtain the requirements of the memory type whose allocation failed.
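The difference between the two encodings can be sketched in a few lines of C. The functions below are hypothetical and do not reproduce AllocEntry()’s implementation; they only model a 32-bit return value that is either an address or, on failure, the memory requirements plus an error flag. With the flag in bit 31, any valid address at or above 2GB is indistinguishable from a failure; with the flag in bit 0 and the requirements shifted left by one (assuming they fit in 31 bits), the whole 32-bit range stays usable.

```c
#include <assert.h>
#include <stdint.h>

/* Original scheme: failure = requirements with bit 31 set. */
#define ERR_BIT31 0x80000000u

int is_error_bit31(uint32_t result)
{
    return (result & ERR_BIT31) != 0;  /* also true for any address >= 2GB */
}

/* Alternative scheme: failure = (requirements << 1) | 1.
   Valid blocks are at least 8-byte aligned, so bit 0 of an address is 0. */
uint32_t make_error_bit0(uint32_t requirements)
{
    return (requirements << 1) | 1u;   /* assumes requirements fit in 31 bits */
}

int is_error_bit0(uint32_t result)
{
    return (result & 1u) != 0;
}

/* Undo the shift to recover the requirements that could not be met. */
uint32_t requirements_bit0(uint32_t result)
{
    return result >> 1;
}
```

A block at 0x90000000 (2.25GB) is reported as a failure by the first check but correctly accepted by the second.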

In any case, you can’t unscramble scrambled eggs, and those heavy limitations remain. To try to mitigate them, one of the other two post-Amiga OSes introduced a mechanism very similar to bank switching (in vogue on 8-bit systems around the first half of the ’80s): areas of the address space are reserved for the applications that need them, and physical memory lying beyond the normally addressable range is then mapped into those areas on demand, according to each application’s requests.

In short, this is an ugly patch for an issue that has been dragging on for a very long time, and one that will probably make many amateur enthusiasts turn up their noses, since they used to make fun of PCs for using segmented memory (while their own machines could address all memory linearly and directly).

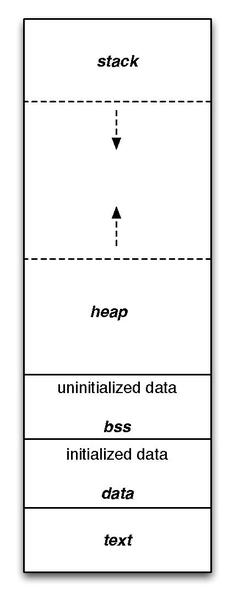

The Stack

Another tough nut to crack, for which no solution has ever been found, is stack management. Every application needs a portion of memory dedicated to the stack, where functions’ local variables and the return addresses of calls are stored.

The nature of the stack is, therefore, extremely dynamic: the pointer to its top (called the stack pointer in jargon) moves continuously with the depth of function/method calls, and only at the start of an application is it positioned at the highest address of the area reserved for it (on the 68000, as on most architectures, the stack grows downwards).

The fact that it is a dynamic structure is great for the type of use and operation that is made of it, but it is also a double-edged sword in the event that execution gets out of control and, for instance, the processor starts using memory higher up than the initial position of the stack.

This is a fairly rare case, while much more frequent and easier to happen is the opposite case, i.e. that too much memory is consumed on the stack by going very low in memory locations, which can happen if a program makes too much use of recursion, for example.

A modern OS is able to intercept the first case and block the application, while in the second it can extend the stack by adding memory at the bottom when it realises that the application has used more than was initially allocated for it.

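This is a fairly quick and simple operation for an OS to implement if we consider how an application’s memory is generally mapped: the stack sits near the top of the process’s own address space, with free room below it to grow into.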

The big problem with the Amiga is that, unfortunately, it cannot use a model like this, since the entire address space is shared by all tasks/threads and by the OS itself. Therefore, when an application is loaded, its memory blocks end up wherever free memory happens to be.

For this reason, every application has to specify how much memory must be allocated for its stack in order to run properly, but this is still a figure set by the developer based on what he thinks the stack consumption might be.

Unfortunately, one can easily guess what big problems can occur in this case. The first is that it is not possible to increase the size of the stack if the amount of allocated memory is exceeded.

The second, linked to the first, is that the processor will quietly continue execution as if nothing had happened, starting to use the memory below the allocated block, which could already be in use for anything else (other applications or even the OS), with disastrous results that are sometimes very hard to diagnose, since it is often not easy to realise that there is a problem at all and, above all, that it is caused precisely by an insufficient stack.
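A toy simulation in standard C shows why the damage goes unnoticed. It assumes a 64-byte array standing in for the single shared address space, with another task’s data placed just below our task’s 16-byte stack; all names and sizes are arbitrary. Pushing data, as on the 68000, involves no bounds check, so a deep enough sequence of pushes simply overwrites the neighbour. A canary byte at the bottom of the stack lets a program notice the overflow after the fact, but cannot prevent it.

```c
#include <assert.h>
#include <string.h>

/* A toy "address space" shared by everyone, as on the Amiga. */
static unsigned char arena[64];

#define NEIGHBOUR      (arena + 16)   /* 16 bytes owned by another task */
#define NEIGHBOUR_SIZE 16
#define STACK_BOTTOM   (arena + 32)   /* our task's 16-byte stack */
#define STACK_TOP      (arena + 48)   /* the stack grows downwards from here */
#define CANARY         0xA5u          /* sentinel in the lowest stack byte */
#define FILL           0x11u          /* the neighbour's data */

static unsigned char *sp;             /* simulated stack pointer */

void sim_reset(void)
{
    memset(arena, 0, sizeof arena);
    memset(NEIGHBOUR, FILL, NEIGHBOUR_SIZE);
    *STACK_BOTTOM = CANARY;           /* usable stack is now 15 bytes */
    sp = STACK_TOP;
}

/* Push one byte: like the 68000, no bounds check at all. */
void sim_push(unsigned char value)
{
    *--sp = value;
}

int canary_intact(void)
{
    return *STACK_BOTTOM == CANARY;
}

int neighbour_intact(void)
{
    for (int i = 0; i < NEIGHBOUR_SIZE; i++)
        if (NEIGHBOUR[i] != FILL)
            return 0;
    return 1;
}
```

With 10 pushes both checks pass; with 20, the canary and the top bytes of the other task’s data are overwritten, and nothing stops execution.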

Access to OS structures (and lack of abstraction)

On the other hand, this is a consequence of the fact that the Amiga OS has essentially no form of protection and that access to and use of memory is “open” to anyone, even with regard to the structures of the OS itself.

These structures are, in fact, public. Their specifications and details have been published by Commodore and made available to developers, with some cases in which certain details (e.g. the functioning of the task scheduler) have not been published and, therefore, reserved for internal use only.

The most telling example is the Exec library, the heart of the entire OS, whose main structure is entirely visible and accessible. Some fields have been marked as private, but they are still used by applications for lack of APIs serving certain purposes (e.g. there is none for getting the list of running tasks).

Obviously, the problem does not only concern Exec, but is endemic to the entire platform: the lack of appropriate APIs and/or the publication of a lot of details on the internal structures of the OS has been the main cause of the blocking of its evolution.

To give a practical example, let us look at the API that is used to open a window:

NAME
    OpenWindow -- Open an Intuition window.

SYNOPSIS
    Window = OpenWindow( NewWindow )
    D0                   A0

    struct Window *OpenWindow( struct NewWindow * );

On the surface, it is very simple, because all details (attributes and various structures) are hidden within the single parameter of type NewWindow. The latter, however, contains several elements as well as pointers to other data structures, the owner of which is not known, nor is it known whether these structures have been shared with other applications (which is very important for the resource management issues mentioned earlier).

However, the most important aspect is represented by the value returned by this function, which is a pointer to the structure of type Window. The OS has, therefore, shared with the application the internal structure it uses to manage windows.

Let us now see how a well-designed OS allows a window to be opened without exposing its internal details. Take Windows 1.0 (released in 1985, the same year as the Amiga), which uses the famous WIN16 API (later published as an ECMA standard):

HWND CreateWindow(LPCSTR lpszClassName, LPCSTR lpszWindowName, DWORD dwStyle,
                  int x, int y, int nWidth, int nHeight, HWND hWndParent, HMENU hMenu,
                  HINSTANCE hInstance, LPVOID lpCreateParams);

27.2 Description

The CreateWindow() and CreateWindowEx() functions are used to make a window. CreateWindowEx() differs from CreateWindow() by one extra parameter - extended window style.

As may be noted, part of the data relating to the resources used (“parent” window, menu, etc.), as well as the value returned by the function itself, are abstract (“opaque”) objects, called handles in jargon, which do not contain any information or details concerning their internal structures.

In particular, it should be emphasised that every resource is represented and managed by the OS always and only as a handle, never directly as a pointer to a structure whose layout is public.

This forces developers to use special APIs in order to request information of a certain type (getter, in jargon) or to request its change (setter, again in jargon):

WORD GetClassWord(HWND hWnd, int nIndex);
WORD SetClassWord(HWND hWnd, int nIndex, WORD wNewWord);

24.2 Description

The GetClassWord() and SetClassWord() functions retrieve and set a word value at the specified offset from extra memory associated with the class associated with the specified window.

Everything therefore takes place in an absolutely controlled (as well as “opaque”) manner by the OS, without the application being able to access internal details or even change them as it wishes, contrary to what unfortunately happens with the Amiga’s OS (and “successors”).
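The principle can be sketched in plain C. Everything below is hypothetical (HWIN, win_open(), win_get_width() and the rest are not Win16 functions): the “OS” keeps its window records private, hands out small integers as handles, and validates every handle passed back to it, so it can reject bad values and remains free to change the private layout later.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Private to the "OS": applications never see this layout. */
struct window_impl {
    int in_use;
    int width, height;
};

#define MAX_WINDOWS 8
static struct window_impl windows[MAX_WINDOWS];

/* What applications get: an opaque number, not a pointer. */
typedef uint32_t HWIN;
#define HWIN_INVALID 0u

HWIN win_open(int width, int height)
{
    for (uint32_t i = 0; i < MAX_WINDOWS; i++) {
        if (!windows[i].in_use) {
            windows[i].in_use = 1;
            windows[i].width = width;
            windows[i].height = height;
            return i + 1;            /* 0 is reserved for "invalid" */
        }
    }
    return HWIN_INVALID;
}

/* Translate a handle back to the private record, validating it. */
static struct window_impl *lookup(HWIN h)
{
    if (h == HWIN_INVALID || h > MAX_WINDOWS || !windows[h - 1].in_use)
        return NULL;
    return &windows[h - 1];
}

/* Getter and setter: the only way to read or change a window. */
int win_get_width(HWIN h)
{
    struct window_impl *w = lookup(h);
    return w ? w->width : -1;
}

int win_set_width(HWIN h, int width)
{
    struct window_impl *w = lookup(h);
    if (!w || width <= 0)
        return 0;                    /* rejected: the OS stays in control */
    w->width = width;
    return 1;
}

void win_close(HWIN h)
{
    struct window_impl *w = lookup(h);
    if (w)
        w->in_use = 0;
}
```

After win_close(), the same handle is simply rejected, whereas a stale pointer to a freed structure would silently read or corrupt whatever now occupies that memory.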

Kernel/supervisor mode… and more

The extreme freedom offered by the OS can also be seen in another aspect that is not insignificant and which does not immediately stand out: that of being able to easily switch from user mode to supervisor/kernel mode (and vice versa, but… only if you want to!).

In fact, Exec provides special APIs for this purpose, SuperState() and UserState(), which allow switching from one mode to the other. There is no need to point out how dangerous this is, given how hard modern OSes strive to keep the two worlds isolated and to regulate the transition between them in an ironclad manner.

In the same vein are the APIs that disable (Forbid()) and re-enable (Permit()) multitasking, and even disable (Disable()) and re-enable (Enable()) interrupts. If switching to supervisor mode was already the stuff of tears, you can imagine how much power, and how much damage, these four APIs (which are also the main obstacle to an SMP implementation) put in the hands of any application.

Unfortunately, these are situations that sometimes cannot be avoided, precisely because of the lack of other APIs to enable certain operations (getters, setters, enumerators/iterators), thus forcing applications to resort to them and, each time, seriously jeopardising the stability of the system.

One can already see how the apparent freedom offered by the Amiga’s OS actually hides a general shortage of APIs, due either to the carelessness of its designers or to the chronic lack of memory/resources for implementing them.

That this may be a general predisposition of the architects who conceived and then implemented it is a more than legitimate doubt, which also surfaces when one encounters other APIs, such as those relating to signals management, for example.

In fact, their documentation states that:

Before a signal can be used, it must be allocated with the AllocSignal() function. When a signal is no longer needed, it should be freed for reuse with FreeSignal().

    BYTE AllocSignal( LONG signalNum );
    VOID FreeSignal( LONG signalNum );

AllocSignal() marks a signal as being in use and prevents the accidental use of the same signal for more than one event. You may ask for either a specific signal number, or more commonly, you would pass -1 to request the next available signal. The state of the newly allocated signal is cleared (ready for use). Generally it is best to let the system assign you the next free signal.

Of the 32 available signals, the lower 16 are reserved for system use. This leaves the upper 16 signals free for application programs to allocate. Other subsystems that you may call depend on AllocSignal().

Note, in particular, the statement that the lower 16 signals are reserved for system use. Now let us read the documentation of FreeSignal():

NAME
    FreeSignal -- free a signal bit

SYNOPSIS
    FreeSignal(signalNum)
               D0

    void FreeSignal(BYTE);

FUNCTION
    This function frees a previously allocated signal bit for reuse.
    This call must be performed while running in the same task in which
    the signal was allocated.

there is no mention or explanation of what might happen if, by mistake or intentionally, one asked to release a system signal: would the API fail, or would it proceed quietly? We cannot tell (not least because the function returns no result), but if the latter were the case, it would only confirm the assumption above.
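For contrast, here is a minimal sketch of what a defensive implementation could look like, assuming a single 32-bit allocation mask per task whose lower 16 bits are pre-reserved for the system, as the documentation quoted above describes. The functions alloc_signal() and free_signal_checked() are hypothetical, not Exec’s code, and the top-down search order is an arbitrary choice of this sketch. Refusing to free a system signal, or one that was never allocated, and reporting the failure costs only a couple of comparisons.

```c
#include <assert.h>
#include <stdint.h>

#define SYSTEM_SIGNALS 0x0000FFFFu   /* lower 16 bits reserved for the system */

/* Hypothetical per-task allocation mask. */
static uint32_t sig_alloc = SYSTEM_SIGNALS;

/* Allocate a specific signal, or any free one when num is -1.
   Returns the signal number, or -1 if it is unavailable. */
int alloc_signal(int num)
{
    if (num == -1) {
        for (num = 31; num >= 16; num--)   /* search order: arbitrary here */
            if (!(sig_alloc & (1u << num)))
                break;
        if (num < 16)
            return -1;
    } else if (num < 0 || num > 31 || (sig_alloc & (1u << num))) {
        return -1;                         /* invalid, system, or in use */
    }
    sig_alloc |= 1u << num;
    return num;
}

/* A checked FreeSignal(): refuses system or unallocated signals
   and tells the caller, instead of returning nothing. */
int free_signal_checked(int num)
{
    if (num < 16 || num > 31 || !(sig_alloc & (1u << num)))
        return 0;
    sig_alloc &= ~(1u << num);
    return 1;
}
```

Freeing signal 3, or freeing the same user signal twice, is simply refused here rather than silently corrupting the task’s state.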

The end result is the extremely fast system that delighted us for so many years: lacking checks, and not allocating resources in many very common and frequent cases, it could hardly have given any other impression.

This does not mean that it was necessarily a good thing: perhaps it was at the time, given the scarcity of resources (although other OSes/platforms were in the same situation), but the choices made by the engineers who worked on the platform are also the ones that essentially condemned it, and with it the post-Amiga OSes still being developed today, to their current state.

Modernity is not just about going beyond the original chipset

It must be said that many, often very heavy, limitations of the Amiga’s OS have been overcome over time, making it more modern, and these changes have been incorporated into the post-Amiga OSes from the very beginning.

I am referring, in particular, to the total symbiosis of the OS with the chipset of the Commodore machines. Graphics, audio and the I/O system were therefore redesigned, or delegated to other libraries, in order to take advantage of the technology “borrowed” from the archenemy, the PC (there was, after all, nothing else left to do once the parent company was gone).

As we all know, for better or worse, the OS APIs are basically a 1:1 mapping with the underlying hardware provided by the chipset. This made the system extremely fast and versatile in exploiting what was under the “chassis”, but also tied it hand and foot to it.

A clear example of this convoluted mentality is the structure (which should be system-only, but is obviously “public” in the Amiga OS) containing the information of a screen, which directly integrates some critical structures:

struct Screen
{
    struct Screen *NextScreen;
    struct Window *FirstWindow;
    WORD LeftEdge, TopEdge, Width, Height;
    WORD MouseY, MouseX;
    UWORD Flags;
    UBYTE *Title, *DefaultTitle;
    BYTE BarHeight, BarVBorder, BarHBorder, MenuVBorder, MenuHBorder;
    BYTE WBorTop, WBorLeft, WBorRight, WBorBottom;
    struct TextAttr *Font;
    struct ViewPort ViewPort;
    struct RastPort RastPort;
    struct BitMap BitMap;
    struct Layer_Info LayerInfo;
    struct Gadget *FirstGadget;
    UBYTE DetailPen, BlockPen;
    UWORD SaveColor0;
    struct Layer *BarLayer;
    UBYTE *ExtData, *UserData;
};

I am referring specifically to ViewPort, RastPort, BitMap and LayerInfo, which are embedded directly rather than referenced through pointers. This atrocious choice means that none of these four structures can ever change in size (for example, by gaining new fields): they must remain exactly as they are in all future versions of the OS, on pain of losing compatibility with the entire ecosystem.
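The effect can be checked with offsetof() in standard C. In the sketch below, whose types are invented for the example, an inner structure gains one field from version 1 to version 2: when it is embedded by value, the offset of every following field changes, breaking any compiled application that hard-codes it; when it is referenced through a pointer, the outer layout stays the same.

```c
#include <assert.h>
#include <stddef.h>

/* Version 1 of an inner structure, and a hypothetical version 2
   that adds one field. */
struct inner_v1 { short a, b; };
struct inner_v2 { short a, b; long extra; };

/* Embedded by value, as Screen does with ViewPort, RastPort, BitMap
   and LayerInfo: every later field moves when the inner struct grows. */
struct outer_embedded_v1 { struct inner_v1 in; short after; };
struct outer_embedded_v2 { struct inner_v2 in; short after; };

/* Referenced through a pointer: the outer layout does not depend on
   the size of the inner structure. */
struct outer_pointer_v1 { struct inner_v1 *in; short after; };
struct outer_pointer_v2 { struct inner_v2 *in; short after; };

/* Compiled applications effectively hard-code offsets like these. */
size_t embedded_offset_v1(void) { return offsetof(struct outer_embedded_v1, after); }
size_t embedded_offset_v2(void) { return offsetof(struct outer_embedded_v2, after); }
size_t pointer_offset_v1(void)  { return offsetof(struct outer_pointer_v1, after); }
size_t pointer_offset_v2(void)  { return offsetof(struct outer_pointer_v2, after); }
```

An old binary reading `after` at the version 1 offset would, on a system built with version 2, read part of the new field instead.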

This is particularly evident for BitMap:

struct BitMap
{
    UWORD BytesPerRow;
    UWORD Rows;
    UBYTE Flags;
    UBYTE Depth;
    UWORD pad;
    PLANEPTR Planes[8];
};

which represents a framebuffer (the actual graphics) and which, as can sadly be seen, exactly reflects the nature of the original chipset, in which the planar graphics are stored in individual bitplanes, of which the (maximum) 8 pointers are visible.

Needless to say, such a structure does not fit at all with the evolution of graphics subsystems, which have led us to “packed/chunky” graphics and far beyond the limit of 8 bits per pixel (256 colours).
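To see why, the sketch below reads the colour index of one pixel from a bitmap laid out like the BitMap structure shown above (with local typedefs so it compiles on its own; the struct and function names are invented for the example). In planar form each of the Depth bitplanes contributes one bit, plane 0 supplying the least significant one, and the leftmost pixel of each byte is stored in its most significant bit. In a chunky framebuffer the same lookup is a single byte read, and nothing in the planar structure can describe more than 8 bits per pixel.

```c
#include <assert.h>
#include <stdint.h>

/* Local stand-ins for the Amiga types, so the sketch is self-contained. */
typedef uint16_t UWORD;
typedef uint8_t UBYTE;
typedef UBYTE *PLANEPTR;

/* Same layout as the BitMap structure above. */
struct PlanarBitMap {
    UWORD BytesPerRow;
    UWORD Rows;
    UBYTE Flags;
    UBYTE Depth;
    UWORD pad;
    PLANEPTR Planes[8];
};

/* Colour index of pixel (x, y): gather one bit from each bitplane. */
unsigned planar_pixel(const struct PlanarBitMap *bm, unsigned x, unsigned y)
{
    unsigned offset = y * bm->BytesPerRow + x / 8;
    unsigned mask = 0x80u >> (x % 8);    /* leftmost pixel = bit 7 */
    unsigned colour = 0;
    for (unsigned p = 0; p < bm->Depth; p++)
        if (bm->Planes[p][offset] & mask)
            colour |= 1u << p;           /* plane p supplies bit p */
    return colour;
}

/* Chunky (packed, 8 bits per pixel): one byte per pixel. */
unsigned chunky_pixel(const UBYTE *fb, unsigned bytes_per_row,
                      unsigned x, unsigned y)
{
    return fb[y * bytes_per_row + x];
}
```

Every planar pixel access touches Depth separate memory areas, which is efficient for the original chipset but a poor match for modern graphics hardware.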

The situation is even worse for audio, as there are no high-level APIs to be able to use channels to reproduce sound samples and, therefore, one has to resort to the specific device (audio.device) by issuing the appropriate commands for this purpose.

In any case, we are once again faced with a 1:1 mapping of the Amiga’s audio subsystem: only the four channels available on this hardware can be used, they play 8-bit sound samples (so no 16-bit or other formats), and volume (64 levels) and frequency (about 28kHz maximum) must reflect the values to be set in the corresponding registers of the custom chip (Paula).
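As an illustration of how directly the hardware leaks into the interface, the sketch below computes the period value Paula expects for a given sample rate (period = clock / rate) and converts a 16-bit sample into the signed 8-bit format the chip plays. It assumes the commonly cited clock constants (3546895 Hz for PAL, 3579545 Hz for NTSC) and a minimum DMA period of 124; the function names are hypothetical, and an application would pass such values through audio.device rather than writing the registers itself.

```c
#include <assert.h>

/* Commonly cited Paula clock constants (Hz) and minimum DMA period. */
#define PAULA_CLOCK_PAL  3546895UL
#define PAULA_CLOCK_NTSC 3579545UL
#define PAULA_MIN_PERIOD 124UL

/* Period value for a desired sample rate, rounded to the nearest
   integer and clamped to the hardware minimum. */
unsigned long paula_period(unsigned long clock, unsigned long rate)
{
    unsigned long period = (clock + rate / 2) / rate;
    return period < PAULA_MIN_PERIOD ? PAULA_MIN_PERIOD : period;
}

/* Sample rate actually produced by a given period. */
unsigned long paula_rate(unsigned long clock, unsigned long period)
{
    return clock / period;
}

/* Paula plays signed 8-bit samples: a 16-bit source loses its low byte.
   Offsetting to unsigned first keeps the division well defined. */
signed char to_paula_sample(short s16)
{
    return (signed char)((s16 + 32768) / 256 - 128);
}
```

With the PAL clock, the minimum period of 124 yields about 28.6kHz, which is where the ceiling mentioned above comes from; a 44.1kHz request is simply clamped.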

All these limitations were largely overcome thanks to hacks/designs outside the original OS, which gave rise to technologies such as RTG for graphics and AHI for audio; these became the de facto standards for using graphics and sound cards, first and foremost those from the PC world.

In a similar way, the limits of the original filesystem were overcome (it was stuck at 32 bits and could therefore handle files of at most 4GB, a limit that initially applied to the entire size of disks/mass storage media too), a TCP/IP stack was introduced to connect to the Internet (bringing browsers with it), along with a USB stack, libraries that made it easier to build graphical interfaces, and so on.

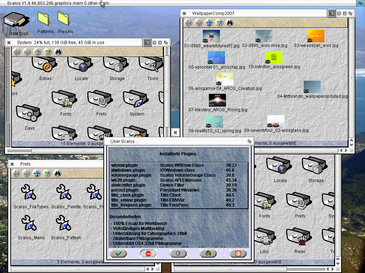

There were even complete replacements for the old desktop/file manager (the Workbench), among which Scalos and Directory Opus (which later became Magellan) are noteworthy.

A lot was done, in short, to improve the system considerably, even without Commodore’s involvement (whether while it could still have contributed or after its bankruptcy), making the user experience much more pleasant and easier.

Conclusions

All these innovations, however, cannot hide the inherent limitations of the system, the most important of which have been covered in the respective sections of this article.

It is true that the post-Amiga platforms offer much more than the original OS, but, as mentioned above, most of these changes were brought to the original as well, and they cannot in any way hide or mitigate how extremely limited and limiting these systems still are today.

These are the reasons that lead me to reject the label of “Amiga NG” that has been attributed to the three post-Amiga systems I mentioned at the beginning: since the intrinsic limitations of the original platform have not disappeared, there is no reason to talk about “Next Generation”.

AROS is the only one that has solved some problems, in part, but it is still too little to be able to speak of “NG”.

The truth is that going beyond such limitations would require rethinking the Amiga platform entirely, getting rid of the foundations on which it is based and thus arriving at a new system, one merely inspired by the one that made us happy.

Something that, however, the current developers do not feel like doing, condemning, in effect, their products to remain forever isolated in a small niche of Hobby/ToyOS systems.