I had already touched on this in the series of articles on the age-old RISC vs. CISC dispute. The time has now come to address the issue head-on and to set out again, this time in reverse, the reasons why it makes more sense than ever today to turn our attention to CISC architectures rather than RISC ones (which, as has already been demonstrated, are in fact no longer RISCs).

The four pillars on which RISCs were based are precisely the reasons why it no longer makes sense to impose these limits on architectures and microarchitectures: they are now clearly artificial, if not purely ideological or dogmatic.

They may have been fine when the RISC concept was developed and for the first processors of this macro-family, but technological evolution and the changing needs of computer systems have dismantled these foundations one by one, as the following analysis shows.

1. There must be a reduced set of instructions

Keeping the number of instructions in an architecture small may have been fine when chips had few transistors, since the manufacturing processes of the time allowed only a limited number to be packed into a given area. But, as the famous Moore’s Law predicted, that problem was solved quickly, within a few years.

At some point, therefore, the abundance of transistors raised the opposite question: how to use them. Adding caches for code and/or data is certainly a good way to do so, as is adding ever more sophisticated branch predictors, enlarging the TLBs, or even adding more pipelines for instruction execution.

All this contributes to improving the performance of a processor, but there are cases where this is not enough. There are, in fact, specific tasks that require the execution of numerous instructions to achieve the desired result.

If the algorithm is small enough, simple enough, and has few inputs (one, two, or even three in some cases), one can think of adding special instructions that execute it directly in hardware. This yields significant increases in program execution speed and also reduces the space occupied by the code (one instruction replaces several).

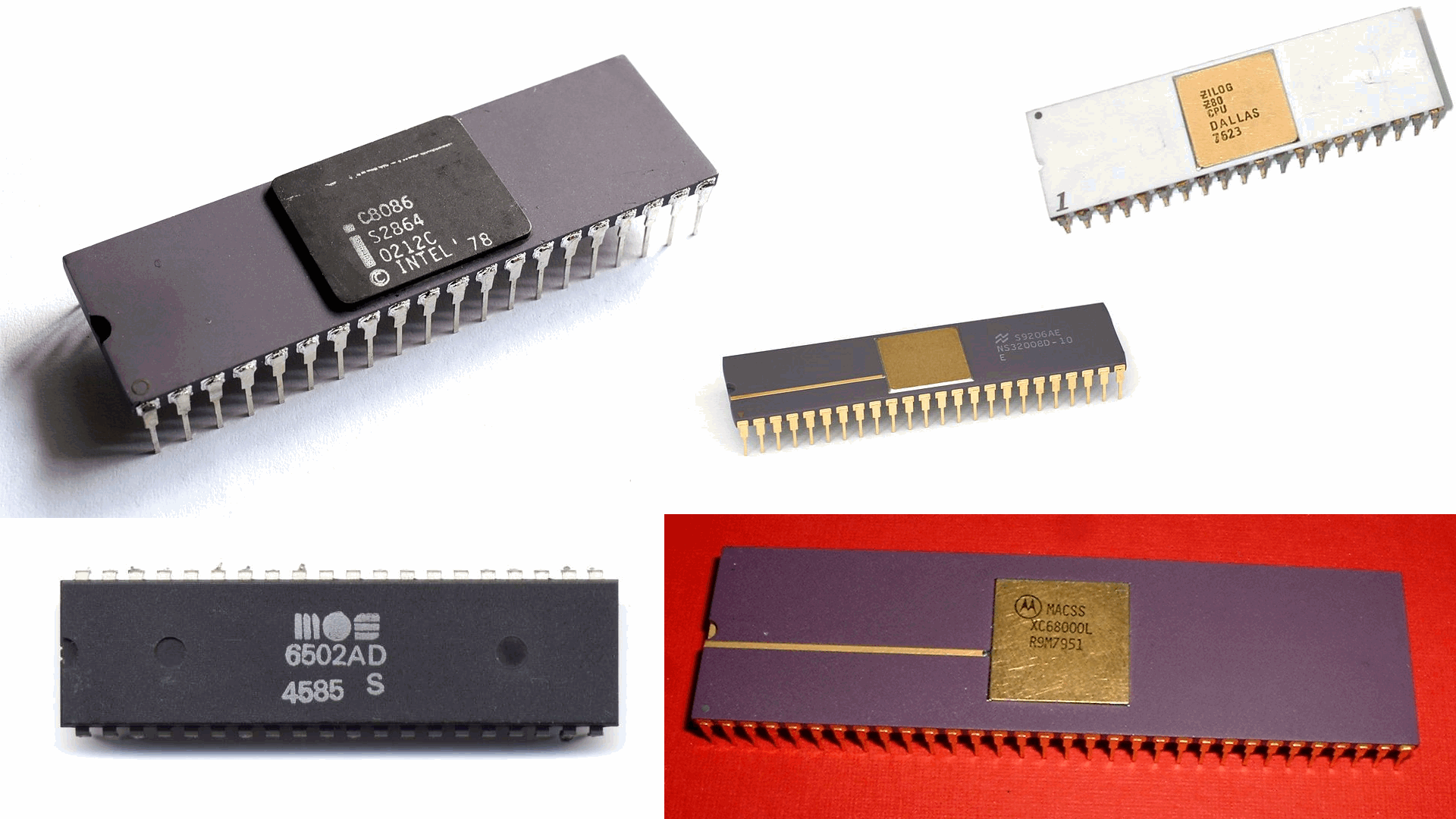
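As a rough illustration of "one instruction replaces several", the sketch below counts the simple operations needed to compute a population count (the number of bits set in a word) with a shift-and-mask loop, and compares that with a single dedicated operation. Population count is an example chosen here, not one taken from the article; the function names and the decision to ignore loop overhead are assumptions made for the sake of the sketch.

```python
def popcount_simple_ops(value, width=32):
    """Count the set bits of `value` using only simple operations.

    Returns (bit_count, simple_operations_executed). Loop overhead
    (counter update and branch) is deliberately not counted.
    """
    count = 0
    ops = 0
    for _ in range(width):
        count += value & 1  # one AND plus one ADD
        value >>= 1         # one shift
        ops += 3
    return count, ops


def popcount_single_instruction(value, width=32):
    """Stand-in for a dedicated population-count instruction: one operation."""
    mask = (1 << width) - 1
    return bin(value & mask).count("1"), 1
```

Under these assumptions, a 32-bit word costs 96 simple operations in the loop version against one for the specialised version. Real instruction counts depend on the ISA and on how clever the software routine is, so the ratio should be read as indicative only.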

Basically, this is exactly what the first processors did, implementing in hardware operations such as BCD arithmetic, manipulation of strings and memory blocks, creation and maintenance of stack frames, and so on.

Today, any processor provides hundreds or even thousands of instructions for precisely the same reasons. There have been changes, it is true, but they only concern the type of instructions implemented in hardware, since there are other needs to be met. The need for specialised instructions, however, has never disappeared and has always been amply satisfied.

We have thus seen the return of one of the typical characteristics of many (but not all) CISCs: a large instruction set.

3. Instructions must have a fixed length

I think it is absolutely undeniable that having fixed-length instructions simplifies their decoding, as well as their internal handling. But the other side of the coin is that the size of programs increases considerably and/or the number of instructions to be executed increases, depending on the size of the opcode.

With the exception of particular processors, such as VLIWs, RISC processors have generally had fixed instruction lengths of 16 or 32 bits. Both sizes have their merits and drawbacks, of course.

Having 16-bit opcodes greatly improves code density and, therefore, allows programs to occupy less memory space, with associated benefits in performance and power consumption for the entire memory hierarchy.

On the other hand, 16-bit opcodes can address only a few registers and/or encode only a few instructions, and/or they require several instructions to perform a given task. This, as a consequence, negatively affects performance (and also power consumption, if more than one instruction must be executed for the same task).

In particular, it should be emphasised that limiting oneself to opcodes of only 16 bits becomes a major handicap for a modern, more general-purpose processor, since hundreds of instructions covering several areas (FPU, SIMD/vector, encryption/hashing, and so on) are now integrated.

Conversely, 32-bit opcodes do not suffer from these problems, and they can carry immediate values or offsets (for jumps or memory addressing) with a much wider range than 16-bit opcodes allow. They are, however, particularly detrimental to code density.

For these reasons, many processors that are passed off as RISCs (we have seen in the dedicated series that in reality they are not), i.e. those with L/S (load/store) architectures, have adopted ISAs that mix 16- and 32-bit opcodes. The aim is to obtain the advantages of both while limiting the complication of handling opcodes of different lengths (there are only two to take into account). They thus become variable-length architectures.

There are not many alternatives, after all: switching to a variable-length ISA is basically the only way to achieve good code density and, at the same time, be able to rely on a more general architecture. L/S ISAs with only 16-bit opcodes and very good code density do exist, but they are not very general (they are fine in the embedded domain, for example).
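The effect of mixing 16- and 32-bit opcodes on program size can be sketched with simple arithmetic. The function below is a hypothetical helper, and the fraction of instructions that fit a 16-bit encoding is an assumed input, not a figure from the article.

```python
def code_size_bytes(instruction_count, short_fraction):
    """Program size when `short_fraction` of the instructions use a
    16-bit encoding and the remainder use a 32-bit encoding."""
    short = round(instruction_count * short_fraction)
    long_ = instruction_count - short
    return short * 2 + long_ * 4


fixed_32 = code_size_bytes(1000, 0.0)   # every instruction is 32 bits
mixed = code_size_bytes(1000, 0.5)      # assumed: half fit in 16 bits
```

With the assumed 50% split, the same 1000 instructions take 3000 bytes instead of 4000. The sketch keeps the instruction count constant, which is the point of a mixed encoding; an ISA restricted to 16-bit opcodes would typically need more instructions for the same work.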

CISCs, on the other hand, had very few problems, as they were often able to rely on opcodes of variable length and not limited exclusively to 16 or 32 bits, thanks to which it was possible to specify large immediate values or offsets, or even instructions with multiple functionalities (see the RISC-V limits for vector instructions, for example, which allow only one register to be used for masking/predication, due to lack of space in 32-bit opcodes).

It is worth emphasising the importance of this. In ISAs that cannot specify large immediate values or offsets in a single instruction, obtaining them requires additional registers and the execution of several mutually dependent instructions (which may therefore introduce stalls in the pipeline), with obvious negative repercussions on performance and code density.
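A concrete case of this is the way a 32-bit constant is commonly built on RISC-V with 32-bit opcodes: one instruction (LUI) sets the upper 20 bits and a second, dependent one (ADDI) adds a sign-extended 12-bit value. The sketch below models that split in plain arithmetic; the function names are hypothetical and the code only illustrates the dependency between the two steps, it is not an assembler.

```python
MASK32 = 0xFFFFFFFF


def split_imm32(value):
    """Split a 32-bit constant into (hi20, lo12) for a LUI + ADDI pair.

    ADDI sign-extends its 12-bit immediate, so the low part lies in
    [-2048, 2047] and the high part is adjusted to compensate.
    """
    lo12 = value & 0xFFF
    if lo12 >= 0x800:
        lo12 -= 0x1000
    hi20 = ((value - lo12) >> 12) & 0xFFFFF
    return hi20, lo12


def materialize(hi20, lo12):
    """Rebuild the constant in two dependent steps."""
    reg = (hi20 << 12) & MASK32   # step 1: LUI writes the upper 20 bits
    reg = (reg + lo12) & MASK32   # step 2: ADDI must wait for step 1
    return reg
```

For example, `split_imm32(0xDEADBEEF)` gives `(0xDEADC, -273)`, and `materialize` turns that pair back into `0xDEADBEEF`. An ISA that can embed the full 32-bit value in one instruction needs neither the second step nor the dependency between the two.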

The advantage of CISCs is quite obvious.

4. Instructions must be simple

Executing instructions in a single clock cycle is one of the cornerstones of RISCs, which is why they limited the ISA to rather simple instructions in order to guarantee compliance with this requirement, obviously to the absolute benefit of performance.

Another reason for imposing simple instructions was that they would allow processors to reach higher operating frequencies, again with a positive impact on performance.

The dream of ever higher frequencies has, however, crashed against the limits of fabrication processes and, more generally, of the materials used: running at higher frequencies made the chips’ power consumption explode, effectively raising a wall against further gains from clock speed.

On the other hand, limiting oneself to simple instructions meant the exclusion of many very useful operations, such as the famous ones dedicated to calculations with floating-point values, to give the most eloquent example.

This is, needless to say, a huge limitation that would have clipped the wings of any processor in a very short time and which, in fact, was very quickly set aside and ignored. CISCs, of course, never had this problem, as they have always had to deal with complicated instructions that require several clock cycles to complete.

It must also be pointed out that this dogma affects the previous two pillars as well. Being forced to implement only simple instructions often means avoiding variable-length instructions, which are generally more complicated.

It also means greatly limiting the set of instructions that can be implemented, as well as their usefulness, thus putting the brakes on performance.

2. Only load/store instructions can access memory

With the previous pillars out of the way, only one totem remains to which the ex-RISCs (now L/S) still cling firmly: access to memory exclusively via load/store instructions. This is basically the only feature that clearly differentiates them from CISCs (although, in reality, CISCs could easily be L/S as well).

The reasons for this are quite obvious: relegating memory accesses only to these instructions simplifies their decoding, because only a few instructions have to be ‘intercepted’ and then routed to the appropriate pipeline.

On the other hand, non-L/S architectures may have many instructions that can potentially access memory, which complicates decoding (they must all be intercepted). Furthermore, some microarchitectures may generate multiple micro-ops for one instruction, again complicating the processor’s frontend (which must route multiple micro-ops to the backend, taking their dependencies into account).

These considerations certainly have to be taken into account, but they are perhaps tied a little too closely to the times when only a few transistors were available to implement all this. They no longer apply, or carry far less weight, given the enormous number of transistors available and the considerable complexity that modern processors have reached regardless.

What is artfully concealed or downplayed is the fact that having generic instructions capable of accessing memory directly also brings considerable advantages.

In fact, being forced to use load instructions to fetch values before using them has four significant consequences:

- the addition of instructions to be executed;

- the consequent worsening of code density (more instructions occupy more memory space);

- the use of a register in which to load the value before it can be used;

- a stall (of several clock cycles) in the pipeline, because the second instruction must wait until the value has been loaded into the register before it can read it (technically, this is called the load-to-use penalty).

Entirely similar considerations apply in the opposite case, i.e. when a store instruction must be used to copy the result of an operation into memory (with an associated dependency on the availability of the result).

None of this applies to CISC (non-L/S) processors, which can directly execute a single instruction that both accesses memory and processes the data. This saves a register (which means that such an ISA needs fewer of them) and, in general, costs a load-to-use penalty of only a single clock cycle (which in essence amounts to simply moving to the next stage of the pipeline).
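The comparison can be made concrete with a toy model of an in-order, single-issue core. Everything numeric here is an assumption for illustration: a 2-cycle load-to-use stall for the load/store sequence, and one extra cycle for the register-memory instruction (following the single-cycle figure mentioned above). The instruction tuples and helper names are hypothetical and do not describe any real ISA.

```python
LOAD_TO_USE_STALL = 2          # assumed stall cycles after a load (illustrative)
EXTRA_CYCLES = {"add_mem": 1}  # assumed extra cycle for a register-memory add


def cycles_in_order(program):
    """One cycle per instruction, plus a stall when an instruction reads
    the register written by the load immediately before it."""
    cycles = 0
    pending_load_dest = None
    for op, dest, sources in program:
        cycles += 1 + EXTRA_CYCLES.get(op, 0)
        if pending_load_dest in sources:
            cycles += LOAD_TO_USE_STALL
        pending_load_dest = dest if op == "load" else None
    return cycles


def registers_used(program):
    """Registers touched by the program (names starting with 'r')."""
    regs = set()
    for _, dest, sources in program:
        regs.update(n for n in [dest, *sources] if n.startswith("r"))
    return regs


# Load/store style: r1 <- memory[addr]; r2 <- r2 + r1
LOAD_STORE = [("load", "r1", ["addr"]), ("add", "r2", ["r2", "r1"])]
# Register-memory style: r2 <- r2 + memory[addr]
REG_MEM = [("add_mem", "r2", ["r2", "addr"])]
```

Under these assumptions the load/store sequence takes 2 instructions, 2 registers and 4 cycles, while the register-memory version takes 1 instruction, 1 register and 2 cycles. Actual latencies vary widely between microarchitectures, and out-of-order cores can hide part of the stall, so the model only shows the shape of the argument.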

Conclusions

I think it is quite clear by now that breaking down all four pillars on which RISCs were founded brings benefits that far outweigh the greater implementation complexity it requires.

Even the last totem left standing for the former RISCs, i.e. the use of load/store instructions only, brings far more disadvantages than advantages when set against the obvious benefits of abandoning it.

It is because of all these considerations that, in my opinion, we need to seriously reconsider the introduction of CISC architectures, which may be able to achieve higher single-core/thread performance than L/S ISAs, as they are inherently capable of performing more “useful work” per instruction.

This is particularly evident in processors with simpler (in-order) pipelines, where instructions that can address memory directly save valuable memory and also reduce the resulting stalls.

We must therefore stop demonising this macro-family of processors and restore to CISC its lustre and its rightful place in the landscape of processor architectures.

It is also for this reason that, in a future series of articles, I will present the CISC architecture I have been working on for the past years, which builds on all the points illustrated here and enters a rather stagnant landscape of modern ISAs.