As we had the opportunity to see in the previous article, one of the strengths of NEx64T is to make available a rich combination and variety of configurations in which the “base” instructions can operate, and in particular being able to directly address memory for one or (at most) two of the operands.

In this sense the new architecture fits fully into the groove of much of the CISC processors macrofamily, placing itself, therefore, diametrically opposed to the L/S (Load/Store. The former RISCs) architectures.

Having the possibility to be able to address memory by normal instructions is a huge advantage, as we have already had occasion to discuss several times, but if there are few addressing modes available then this advantage can be reduced considerably.

The legacy of x86 and x64…

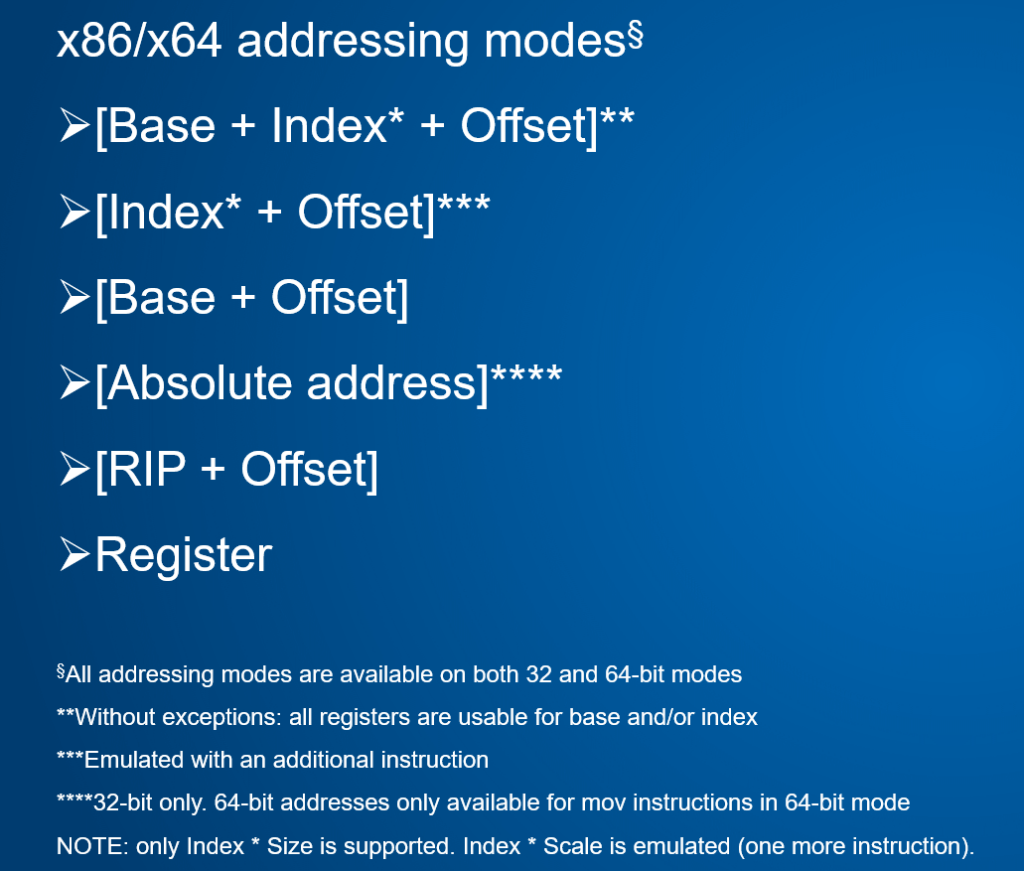

Fortunately, NEx64T was born as a deep inspiration & rewrite of x86 and x64, which already count several useful as well as common ways made available to be able to reference data directly in memory, as is shown in the following table:

NEx64T must necessarily support all these addressing modes in order to be fully compatible with both architectures at the assembly source code level, and this is indeed what occurs, but with some distinctions.

In fact, and as I anticipated in the previous article, the scaled index addressing modes (the first two shown in the table) are supported only if the scaling is the same size as the data read or written, otherwise an additional instruction (to be executed before the actual instruction) is required.

This is a precise architectural choice, in part because the space available to be able to specify the value of the scaling would have entailed sacrifices that would not have justified the lower benefits that would have resulted.

On the other hand, it is worth noting how the goal of being fully source-compatible is equally achieved, and this does not necessarily imply that the same choices should be made as for x86 and x64. This aspect will be better covered in the next article, which will deal specifically with the legacy aspect with respect to these two older architectures.

This is the only difference (purely formal, since you can emulate it safely), since the rest of the modes are supported without problems or even better. In fact x86 and x64 have problems with some modes, because they cannot use all registers, but in some configurations you cannot specify any, whereas with NEx64T you can use any register either as base or index.

Another relevant dissonance is the PC relative addressing mode (RIP), which was introduced by and is available only with x64, while x86 has only the absolute one. And vice versa, since the latter is missing in x64.

NEx64T, on the other hand, allows both to be used in both 32-bit and 64-bit modes. The only caveat: for the absolute one, only a 32-bit address can be used (thus addressing only the first 4GB of memory).

In the case of the 64-bit mode and if you wanted to read or write a value in any of the 64-bit addresses there always remains the possibility, as for x64, of using a particular MOV instruction that allows you to do so (more on this later).

…and the new features of NEx64T

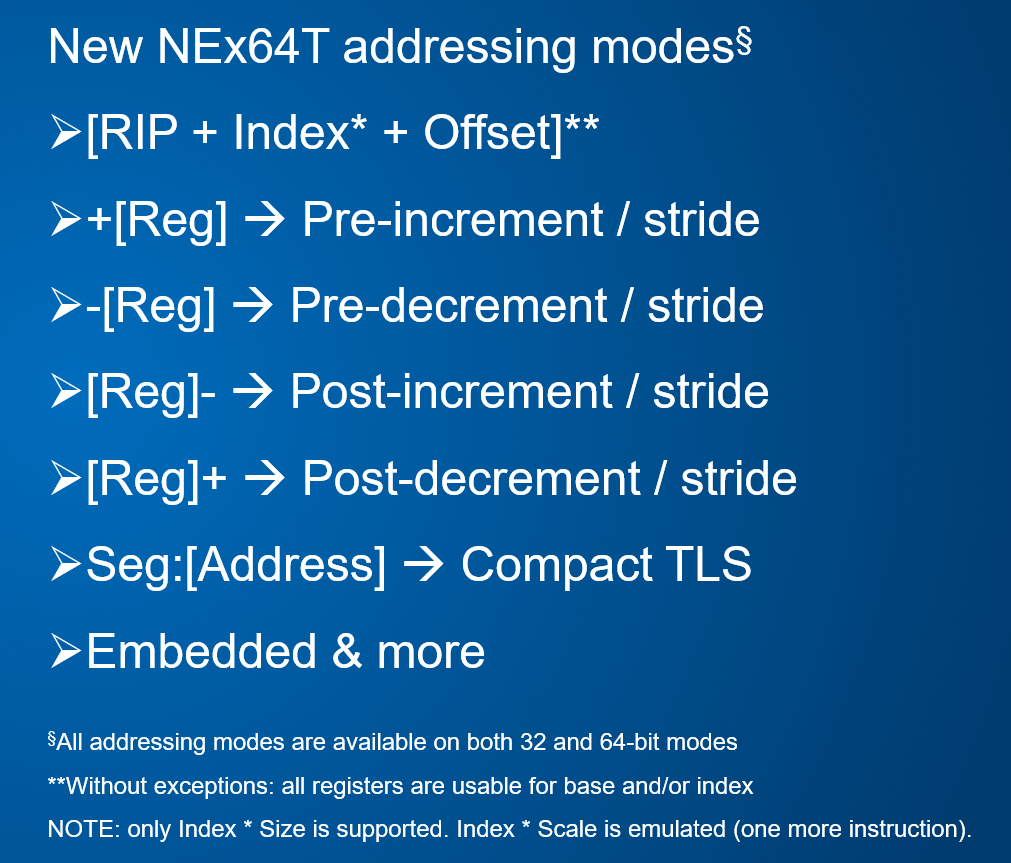

Although NEx64T already proves to be essentially a superset of its own compared to the other two architectures, the one presented was only the tip of the iceberg, as several other addressing modes have been introduced, as can be seen from this new table:

The first was “borrowed” from the glorious Motorola 68000 family (again a great inspiration for NEx64T), which allows the use of a scaled index using, however, the PC (RIP, in x64 jargon) as the base register.

Thus it allows more complex structures (vectors, for example) that are at some distance from the code that is referencing them to be addressed, without requiring that the base address first be loaded onto a register (as would be the case with x64. With x86, however, it is possible to specify a 32-bit offset that, therefore, covers the entire address space).

In this way, it is possible to implement the very useful table of function pointers (complex switch/case constructs, emulators, virtual machines, …) very easily, directly, quickly, and without even dirtying a register (by introducing, among other things, a dependency in the pipeline).

The other four modes that follow are pre-increment, pre-decrement, post-increment, and post-decrement. All four make use of a base register, to which a constant value (this is, in the first two cases) is added or subtracted (in the first two cases) before reading or writing the data to the referenced memory location, or this value is added or subtracted only after the read or write (thus in the last two cases).

A very common use-case for a processor is to perform operations on blocks of memory (vectors of integers, floating-point values, pointers, data structures having a fixed size), which are scanned from the beginning (first element) to the end (last element), or vice versa.

Such code is often implemented making use of the aforementioned indexed and scaled addressing mode, but only for architectures that have one available. Failing that, pointers must be used: the address of the first element (or the last, if it is to be scrolled in reverse) is computed, processed, then updated to make it point to the next element, and so on.

Sometimes it is preferred to use pointers even if an architecture has scaled indexed mode available, because perhaps the code that is generated is more compact and/or faster.

Whatever the reason is, this involves executing special pointer update instructions each time an element has been processed. Also, if multiple data structures such as these are being manipulated at the same time, more pointers are needed (ideally and thinking about the general case, one for each structure that is to be read, and one for each structure that is to be written) and, therefore, more instructions for updating them.

The aforementioned four new modes help enormously in these rather common scenarios, as they allow pointer update operations to be performed automatically following the reading or writing of the specific element, achieving significant benefits both in terms of code density (the same instruction does everything) and performance (fewer instructions executed).

Some processors, such as the aforementioned 68000, support all or some of these modes, but are limited only to increasing or decreasing only the size of the read or written data (called unit stride in the jargon). Others, such as ARM for example, allow you to specify a small immediate value (called data stride in jargon), so they are a bit more general.

NEx64T is far ahead in this respect, because it offers much more flexibility: in addition to the unit stride, it allows you to specify a data stride with immediate values of a certain size (up to 32 bits), thus covering many more cases and allowing you to replace more pointer update instructions.

The addressing mode labeled “Embedded & more” does not have such general utility. It is for more niche markets/use cases, such as embedded, precisely, or for working on certain system aspects; there are also some ideas for exploiting it on a more general level. In any case, I prefer not to release further details at the moment, so as not to uncover too many cards: suffice it to say that there is more to it than what is normally found when it comes to addressing modes for a processor.

“Compressed” addressing modes

Finally, the one defined as “Compressed TLS” represents a simplification/speedup of the mechanism of operation of the so-called Thread-Local Storage (TLS, in short) that on x86 and x64 is implemented with the infamous prefixes (FS is used in 32-bit code and GS in 64-bit code).

In this case, the operation of an instruction that references a data item in memory is altered thanks to the appropriate prefix, which instructs the processor to add an additional value to the address of the memory location, which corresponds to the “base” for the particular segment selected.

Nothing transcendental, then, other than the use of the arcane prefix, which obviously makes the instruction that makes use of it lengthen. For this purpose, and since in real application code one finds its use not infrequently, I thought it more effective to define a special mode that would make it immediately usable and without requiring any prefix and longer instructions.

The difference, compared to x86 and x64, is that it now turns out to be possible to use TLS even with instructions that reference multiple operands in memory (as we have already seen in a previous article). So more freedom and flexibility, plus shorter (and less complex to decode) instructions.

This new mode, however, only works when referencing an absolute address in memory (which will actually just be an offset from the base address of the specified segment). Otherwise (so for all other addressing modes) it is necessary to use a longer encoding (which, however, I have not encountered in the disassembled code so far).

Again for the benefit of the plurality of code density, it should be added that NEx64T uses several “compressed” versions of some of the most widely used addressing modes (e.g., the very famous base + offset, with base represented by any register) with the explicit goal of reducing instruction length.

x86 and x64, for example, allow the use of offsets of only 8 bits, in addition to 32-bit offsets, or to suppress the offset altogether. Other architectures have similar structures in the instructions that allow memory access.

It is for this reason that NEx64T incorporates some variety of them, which allowed us to compact the code even more and achieve the (preliminary) results that have already been presented and extensively discussed in past articles.

“Wide” addressing modes

It may seem paradoxical, but counterbalancing such short versions is also the possibility of using certain modes to be able to address memory locations that go far beyond the canonical 32-bit/4GB addressable memory, and by that I am referring explicitly to the 64-bit execution mode.

In fact, if 64-bit architectures came into being, it was primarily to be able to address much more memory than a 32-bit system would allow (albeit with such pasties as PAE, which was added specifically to exceed the usual 4GB of physically addressable memory).

The problem arises, however, of how to get to such distant portions of code or data. One immediate solution is to be able to define an absolute 64-bit addressing mode, so that the entire new address space can be covered. It is easily understood, however, that the instructions will become particularly long, to the detriment of code density and, therefore, performance.

Another solution is to delegate only to some special load/store instructions this possibility, and this is what x64 does, where there is a version of the MOV instruction that allows you to read or write data from/to any memory address, using the accumulator (the AL/AX/EAX/RAX register).

NEx64T also supports this instruction, generalizing it with the possibility of being able to specify any register rather than just the accumulator. It remains, however, that these instructions are particularly long (9 or 10 bytes for x64; 11 for Intel’s new APX extension/architecture. 10 for NEx64T).

In an attempt to mitigate the need for such instructions, NEx64T allows the canonical 32-bit offset to be exceeded for some precise use addressing modes, but without using additional space in the instruction opcodes. Which allows addressing memory up to about 1TB “distance” in some very common cases, including pieces of code.

I should add, as a complement, that jump and subprogram call instructions are available in some more compact versions, but the longest of which allow 1TB of “distance” to be reached in any case, not substantially impacting code density.

In this regard I emphasize how NEx64T has no “long” jump instruction, capable of reaching any memory location in the 64-bit address space, as Intel introduced with the aforementioned APX. The reason is quite simple: since it can already perform jumps and calls to routines with absolute addresses on the order of TB, this requirement is dropped.

Why it is so important to be able to access code or data far from where it is referenced is, finally, well illustrated in a very interesting article that covers all the code/data “models” that are usable on x64 (as many as six: small, medium, large. All three available in absolute or PC-related versions).

Most probably the small and medium models could be grouped together, thanks to the innovations introduced by NEx64T and explained above, to the benefit of both developers and performance.

Immediate values

Last, but absolutely not least, new functionality added by this new architecture is that of being able to specify immediate values of a certain size (which for simplicity I will call tiny, small and medium, without indicating precisely how much information they are capable of carrying / encoding).

We know that x86 and x64 already have instructions that are capable of specifying immediate values of certain sizes, and NEx64T, having to be fully compatible at the assembly source level, is also capable of doing so (but allowing even immediates of as many as 64 bits to be used in these cases, while x64 stops at only 32. Here, then, is an additional advantage!).

The novelty of which I speak, however, is of an entirely different profile and pertains to the ability to be able to specify immediate values in any instruction that is capable of referencing memory locations and with the addressing modes already discussed at the opening of the article.

In this respect it is comparable to a new addressing mode, except that instead of referencing a memory location in order to read its contents and then supply it to the instruction, that value is already immediately available and usable, similar to what Motorola’s fabulous 68000s (which I will never tire of mentioning) have allowed for decades.

This functionality is extremely important, as its beneficial benefits are manifold, falling in some particularly strategic and fundamental areas for a processor:

- less instruction length (thus advantages in overcited code density);

- reduction in the number of data used (because they are removed from the data section, being already directly present in the instructions that use them. In essence, the offsets used to be able to load data from that section are replaced with the data itself);

- reducing the number of memory accesses (to read that data).

The impact of this falls, therefore, on both the code and data caches and, consequently, on the entire memory hierarchy, performance, and processor consumption.

As a corollary, it needs to be pointed out that these immediate values encode information differently, depending on the type of instruction that uses them. They will be:

- integer values (with or without sign) for general-purpose / “integer” / “scalar” instructions, or for those of the x87 FPU or SIMD / vector unit that process integer values;

- floating-point values for FPU/SIMD/vector instructions that process values of this type;

- predefined constants for some very special integer (256, for example) or floating-point (pi, for example) numbers.

In all these cases, “shorter” values can be used regardless of the size of the data the instruction needs to process. If, for example, you are summing 64-bit data but the immediate value you need requires fewer bits to be specified, then you can use a shorter version of it from among the three available (tiny, small, or medium).

Similarly, for floating-point values one may well use an immediate value from among those three available formats and choose the one that succeeds in expressing the same number (or comes very close) even though the instruction in question is performing a 128-bit sum (FP128).

Dulcis in fundo, the immediate value (integer or floating point) is automatically duplicated on all the elements to be processed when using “packed” SIMD instructions or the vector ones, thus not requiring the execution of special instructions (called broadcast or splat, in the jargon) and, therefore, the use of an intermediate register to be used for the operation (which usually involves introducing a dependency in the processor pipeline).

Conclusions

The time has come to stop, because there was so much meat on the fire, but it was also very important to be able to illustrate the many as well as very useful innovations of this new architecture.

Unfortunately, it has not been possible to take advantage of most of them (especially for immediate values), since we need special compilers that are able to use them when needed.

I am, however, firmly convinced that thanks to them the trend, already briefly illustrated in the graphs given in previous articles, will be destined to markedly improve results in terms of code density, number of instructions executed, and reduction of data sections.

Since this has already been discussed on several occasions, the next article will focus on the “legacy” aspects of NEx64T, i.e., how it was possible to introduce a completely different structure of opcodes compared to x86 and x64, while remaining totally compatible at the assembly source level and, therefore, having to support quite a bit of ballast that these two venerable architectures carry.